From Review to Resolution: Prediction Markets vs. Peer Review

Peer Review in Crisis, Markets Rising

Scientific knowledge validation is entering a period of profound transformation. Peer review, long considered the gold standard, is increasingly seen as a legacy mechanism: opaque, slow, and misaligned with the tempo of modern science. This essay proposes a radical alternative: AI-resolved prediction markets that quantify belief in scientific claims through decentralized wagering mechanisms. In this system, legitimacy is reframed not as a static verdict but as a dynamically negotiated probability.

Historically, the peer-reviewed paper served as science’s epistemic checkpoint. But its limitations are now manifest: reproducibility failures, entrenched conservatism, and delays incompatible with today’s information flows. The peer review process, intended to filter rigor from noise, often becomes a bottleneck that stifles heterodoxy and slows innovation. In contrast, prediction markets offer a real-time epistemic ledger, where the credibility of claims is priced dynamically and AI assesses incoming evidence to resolve outcomes. Scientific trust is then no longer granted ex ante by reviewers but earned ex post through open evaluation.

Peer Review: A Broken Gatekeeper?

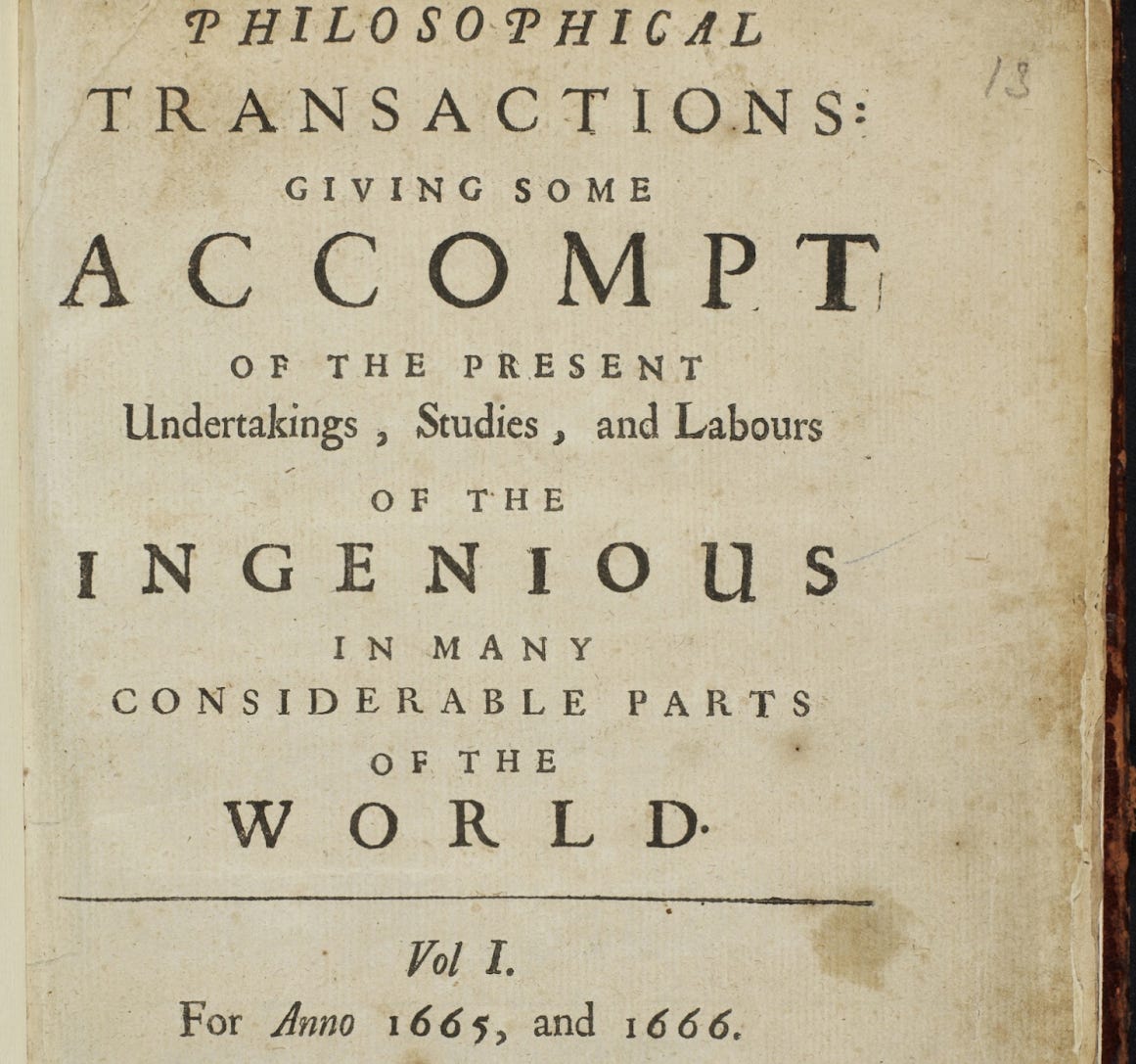

Scientific validation moved from personal correspondence among scientists (e.g., Galileo's letters) to structured journals, beginning with the Philosophical Transactions in 1665. Peer review, formalized by the Royal Society in the 18th century, aimed to democratize knowledge but inadvertently entrenched biases. The Enlightenment transformed these practices by introducing the notion of rational critique, but it also laid the groundwork for hierarchical expertise.

Recent critiques (Bright & Heesen, 2021; Tennant & Ross-Hellauer, 2020) emphasize systemic issues, including conservatism, nepotism, and the slow pace of peer review. Studies have shown that reviewers can be swayed by authors’ reputations, reinforcing the Matthew effect: established scientists face lighter scrutiny while unknowns are examined more closely. Reviewers also tend to favor studies that confirm existing beliefs, which puts disruptive ideas at a disadvantage.

The reproducibility crisis has laid these problems bare. From psychology to biomedicine, landmark studies have failed to replicate, calling into question the reliability of peer-reviewed findings. In a 2016 Nature survey, more than 70% of responding researchers reported having tried and failed to reproduce another scientist’s experiments. This has prompted calls for reform, from open peer review to mandatory replication, but the fundamental problem remains: peer review is a slow, subjective bottleneck.

Prediction Markets: From Gambling to Epistemic Tools

Prediction markets invert the traditional model. Rather than conferring legitimacy through editorial gatekeeping, they reward accurate belief formation. Participants stake money or tokens on whether claims will hold up, and the resulting market prices serve as probabilistic signals of collective confidence. In Dreber et al. (2015), markets on the outcomes of replications of published psychology studies predicted those outcomes well and outperformed a survey of the participants’ individual forecasts, highlighting the power of incentives and aggregated judgment.
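A minimal sketch of how trading turns into a probability: the Python below implements Hanson's logarithmic market scoring rule (LMSR), one widely used automated market maker for binary claims. The essay does not specify a pricing mechanism, so LMSR, the `LMSRMarket` class, and the liquidity value are illustrative assumptions. The YES price always stays between 0 and 1 and can be read as the market-implied probability that the claim holds.

```python
import math


class LMSRMarket:
    """Binary YES/NO market priced by the logarithmic market scoring rule (LMSR)."""

    def __init__(self, liquidity: float = 100.0) -> None:
        self.b = liquidity  # larger b = deeper market, smaller price move per trade
        self.q_yes = 0.0    # YES shares outstanding
        self.q_no = 0.0     # NO shares outstanding

    def _cost(self, q_yes: float, q_no: float) -> float:
        # LMSR cost function: C(q) = b * ln(exp(q_yes / b) + exp(q_no / b))
        return self.b * math.log(math.exp(q_yes / self.b) + math.exp(q_no / self.b))

    def price_yes(self) -> float:
        # Instantaneous YES price, read as the market-implied probability the claim holds.
        e_yes = math.exp(self.q_yes / self.b)
        e_no = math.exp(self.q_no / self.b)
        return e_yes / (e_yes + e_no)

    def buy(self, outcome: str, shares: float) -> float:
        """Buy `shares` of "yes" or "no" and return the cost charged to the trader."""
        if outcome not in ("yes", "no"):
            raise ValueError("outcome must be 'yes' or 'no'")
        new_yes = self.q_yes + (shares if outcome == "yes" else 0.0)
        new_no = self.q_no + (shares if outcome == "no" else 0.0)
        cost = self._cost(new_yes, new_no) - self._cost(self.q_yes, self.q_no)
        self.q_yes, self.q_no = new_yes, new_no
        return cost


market = LMSRMarket(liquidity=100.0)
print(round(market.price_yes(), 3))  # starts at 0.5: no information yet
cost = market.buy("yes", 50)         # an optimistic trader buys YES shares
print(round(cost, 2), round(market.price_yes(), 3))
```

The liquidity parameter `b` controls how far a given trade moves the price; with a small `b`, a single well-funded trader can swing a thin market, which is one reason market design matters.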

This reframes epistemic labor: truth becomes a traded asset, and correctness, a source of financial and reputational capital. Participants aligned with accuracy thrive; those who misprice truth are disadvantaged. In doing so, markets operationalize Popperian falsifiability and Bayesian updating in a continuous, data-driven form.
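The Bayesian-updating point can be illustrated with a small worked example. The sketch assumes, purely for illustration, that a replication succeeds with probability 0.8 when an effect is real and 0.05 when it is not; `bayes_update` and those numbers are hypothetical, not drawn from the essay.

```python
def bayes_update(prior: float, p_rep_if_true: float, p_rep_if_false: float,
                 replicated: bool) -> float:
    """Posterior probability that a claim is true after observing one replication attempt."""
    # Likelihood of the observed replication result under each hypothesis.
    if replicated:
        like_true, like_false = p_rep_if_true, p_rep_if_false
    else:
        like_true, like_false = 1.0 - p_rep_if_true, 1.0 - p_rep_if_false
    numerator = like_true * prior
    return numerator / (numerator + like_false * (1.0 - prior))


# Hypothetical inputs: a trader starts at the market price of 0.6 and assumes a
# replication succeeds with probability 0.8 if the effect is real (power) and
# 0.05 if it is not (false-positive rate).
after_failure = bayes_update(0.6, 0.8, 0.05, replicated=False)
after_success = bayes_update(0.6, 0.8, 0.05, replicated=True)
print(round(after_failure, 2), round(after_success, 2))  # 0.24 0.96
```

Under these assumptions, one failed replication drops a 0.6 belief to 0.24 and one success lifts it to 0.96. A trader holding the updated belief while the market still quotes 0.6 has a reason to trade, and that trading is what pulls the price toward the evidence.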

AI: The Oracle of Science

AI augments this ecosystem as a neutral oracle. Large language models can already read and critique scientific papers, detect statistical inconsistencies, assess argument quality, and integrate evidence across domains. In prediction markets, AI can monitor the literature, evaluate emerging data, and resolve claims at a speed and scale that human reviewers cannot match. The result is an epistemic infrastructure in which claims are not merely published but stress-tested.
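One of the capabilities named above, detecting statistical inconsistencies, can be made concrete with a rule-based check in the spirit of tools such as statcheck: recompute a p-value from a reported test statistic and compare it with the reported p. This sketch is simplified to two-sided z-tests so it needs only the Python standard library; the function names and rounding tolerance are assumptions, and a real oracle would also need t, F, and chi-square distributions.

```python
import math


def two_sided_p_from_z(z: float) -> float:
    # For a standard normal test statistic, the two-sided p-value is erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def check_reported_result(z: float, reported_p: float, decimals: int = 3) -> dict:
    """Compare a reported p-value with the one implied by its z statistic."""
    recomputed = two_sided_p_from_z(z)
    tolerance = 0.5 * 10 ** (-decimals)  # allow for rounding of the reported value
    consistent = abs(recomputed - reported_p) <= tolerance
    # Gross error: reported and recomputed p-values fall on opposite sides of 0.05.
    flips_significance = (reported_p < 0.05) != (recomputed < 0.05)
    return {"recomputed_p": recomputed, "consistent": consistent,
            "flips_significance": flips_significance}


print(check_reported_result(2.5, 0.012))             # consistent within rounding
print(check_reported_result(1.5, 0.03, decimals=2))  # inconsistent, flips significance
```

A result whose reported and recomputed p-values fall on opposite sides of 0.05 is the kind of gross inconsistency that could inform both traders and the resolving oracle.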

Traditional Peer Review vs. AI-Resolved Market-Based Validation

Advantages of AI-Resolved Markets

🟡 Real-Time Vetting

An AI-resolved market could accelerate the vetting of scientific claims. Instead of waiting months for two or three reviewers to approve a paper, the market begins evaluating its core claims, drawing on many participants, as soon as the paper is public. This collective scrutiny can quickly expose weak points. If the methodology has a flaw, many traders may spot it and bet against the claim, sending a quick signal of doubt. If the claim is groundbreaking and sound, traders confident in it can bet in favor, giving early positive feedback. This responsiveness could help triage research in near real time, directing attention to results that appear credible and flagging those that deserve skepticism. In this way, the model addresses a widely acknowledged bottleneck in peer review: limited reviewer capacity delays dissemination.
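The triage idea can be sketched as a simple rule over a claim's recent price history. Everything here is a hypothetical illustration: the `triage` function, the five-observation window, and the 0.15 drop and 0.3/0.7 band thresholds are arbitrary choices, not parameters proposed in the essay.

```python
from typing import Sequence


def triage(prices: Sequence[float], window: int = 5, drop_threshold: float = 0.15,
           credible_above: float = 0.7, skeptical_below: float = 0.3) -> str:
    """Label a claim from its price history (oldest first); all thresholds are illustrative."""
    if not prices:
        raise ValueError("need at least one price observation")
    current = prices[-1]
    recent = prices[-window:]
    # A sharp fall from the recent peak is treated as an early signal of doubt.
    if max(recent) - current >= drop_threshold:
        return "flag: rapid loss of confidence"
    if current >= credible_above:
        return "likely credible"
    if current <= skeptical_below:
        return "deserves skepticism"
    return "uncertain"


print(triage([0.50, 0.55, 0.60, 0.42]))  # flag: rapid loss of confidence
print(triage([0.72, 0.75, 0.80]))        # likely credible
```

Checking for a sharp drop before checking price levels means a claim that was trading high but is rapidly losing support gets flagged rather than labeled credible.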

🟡 Beyond Binary Review

Unlike peer review’s binary decision, markets yield a nuanced probability. This fits the inherently probabilistic nature of scientific knowledge, which is almost never absolute. A study might carry, say, a 60% market-implied chance of being correct. That is valuable information for other scientists and policymakers, arguably more informative than the fact that the study passed peer review, which certifies only that it cleared a certain bar, not that it is true. Over time, the evolving market price can trace the trajectory of a research claim as new evidence emerges, acting as a kind of running meta-analysis. Because the AI resolves the market, the community also gets closure as soon as sufficient evidence is available, rather than watching debates drag on. If well-calibrated, such probabilities could become a new form of scientific credential, supplementing or even replacing traditional signals such as p-values or citation counts.
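Whether market probabilities are well-calibrated, and more informative than a binary verdict, can be checked with a proper scoring rule once claims resolve. The sketch below uses the Brier score (mean squared error against 0/1 outcomes); the four claims and their prices are made-up toy values that only demonstrate the mechanics, not evidence about real markets.

```python
from typing import List


def brier_score(forecasts: List[float], outcomes: List[int]) -> float:
    """Mean squared gap between probabilistic forecasts and 0/1 outcomes (lower is better)."""
    if not forecasts or len(forecasts) != len(outcomes):
        raise ValueError("forecasts and outcomes must be non-empty and the same length")
    return sum((f - o) ** 2 for f, o in zip(forecasts, outcomes)) / len(forecasts)


# Made-up toy data for illustration only: four claims that all passed peer review.
outcomes = [1, 0, 1, 0]                 # 1 = claim later held up, 0 = it did not
market_prices = [0.8, 0.4, 0.6, 0.3]    # final market-implied probabilities
review_verdicts = [1.0, 1.0, 1.0, 1.0]  # "accepted" read as a probability of 1
print(round(brier_score(market_prices, outcomes), 4),
      round(brier_score(review_verdicts, outcomes), 4))  # 0.1125 0.5
```

Treating "accepted" as a probability of 1 is itself a modeling assumption; the point is only that graded forecasts can be scored and compared, while a pass/fail verdict carries no information about degree of confidence.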

🟡 Paying for Rigor

In the market model, getting it right pays. This creates direct financial incentives for behaviors that the current system struggles to reward. Replication studies and thorough robustness checks, for instance, are time-consuming and not always rewarded with publications. In a prediction market, however, a researcher who independently replicates an experiment and finds a discrepancy can use that knowledge to place profitable bets against the original claim. Monetary rewards for replication could encourage more scientists to take on validation work, improving the overall rigor of science. Original authors, in turn, have a motive to be more careful and transparent, because weak claims are likely to be priced down quickly and publicly. The market thus imposes a form of accountability that many fields currently lack, in line with calls for greater research accountability, and effectively internalizes the cost of being wrong.
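The replicator's incentive reduces to simple arithmetic. Assume, for illustration, fixed-price contracts that pay 1 token if the claim fails, bought at 1 minus the current YES price, with no fees and no price impact; the function names and numbers are hypothetical. A researcher whose failed replication leaves them believing the claim has only a 24% chance of holding, while the market still prices it at 70%, expects a profit of (0.70 - 0.24) per NO contract.

```python
def no_contract_profit(yes_price: float, contracts: float, claim_holds: bool) -> float:
    """Realized profit from NO contracts bought at (1 - yes_price), each paying 1 if the claim fails."""
    cost = contracts * (1.0 - yes_price)
    payout = 0.0 if claim_holds else contracts
    return payout - cost


def expected_no_profit(yes_price: float, belief_true: float, contracts: float) -> float:
    # Per contract: P(claim fails) * 1 - (1 - yes_price), which simplifies to yes_price - belief_true.
    return contracts * (yes_price - belief_true)


# Hypothetical replicator: market says 0.70, own replication-informed belief is 0.24.
print(round(no_contract_profit(0.70, 100, claim_holds=False), 2),
      round(no_contract_profit(0.70, 100, claim_holds=True), 2),
      round(expected_no_profit(0.70, 0.24, 100), 2))  # 70.0 -30.0 46.0
```

The expected profit per contract equals the gap between the market price and the trader's own probability, so the further a replication moves a researcher's belief away from the consensus, the more that replication is worth.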

🟡 Epistemic Access for All

By opening evaluation to a broader community, AI-resolved markets could democratize scientific validation. Researchers from less elite institutions, or independent scholars, often lack a voice in the traditional peer review hierarchy. In a market, anyone with insight can influence the outcome. For example, a talented early-career scientist who notices a problem in a famous professor’s paper can bet against it and, if correct, not only earn a reward but also demonstrate their evaluative acumen. Over time, reputation systems might evolve on these platforms (e.g., “X has a track record of accurate predictions in organic chemistry claims”), potentially offering an alternative route to recognition besides journal publications. AI agents in the market might also act as equalizers: an AI that assesses evidence independently has no reason to weigh the social status of whoever produced it. This could reduce the “halo effect” whereby prominent names receive lighter scrutiny in peer review. Because such markets are decentralized, no single journal or publisher holds authority, which could curb the gatekeeping power that a few top journals currently wield in setting research agendas.
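A reputation record like the one quoted above could be computed by scoring each resolved forecast per trader and per field. This hypothetical `ReputationLedger` uses the logarithmic scoring rule, which heavily penalizes confident misses; the class, the clipping constant, and the field labels are illustrative assumptions rather than a design from the essay.

```python
import math
from collections import defaultdict
from typing import DefaultDict, List, Optional, Tuple


class ReputationLedger:
    """Per-trader, per-field track record scored with the logarithmic scoring rule."""

    def __init__(self) -> None:
        # (trader, field) -> log scores, one per resolved forecast
        self._scores: DefaultDict[Tuple[str, str], List[float]] = defaultdict(list)

    def record(self, trader: str, field: str, forecast: float, claim_held: bool) -> None:
        # ln(p) if the claim held, ln(1 - p) if it failed; 0 would be a perfect score.
        p = min(max(forecast, 1e-6), 1 - 1e-6)  # clip so a fully confident miss stays finite
        self._scores[(trader, field)].append(math.log(p if claim_held else 1 - p))

    def summary(self, trader: str, field: str) -> Optional[Tuple[int, float]]:
        """Return (number of resolved forecasts, mean log score), or None with no record."""
        scores = self._scores.get((trader, field))
        if not scores:
            return None
        return len(scores), sum(scores) / len(scores)


ledger = ReputationLedger()
ledger.record("alice", "organic chemistry", 0.9, claim_held=True)
ledger.record("alice", "organic chemistry", 0.2, claim_held=False)
ledger.record("bob", "organic chemistry", 0.5, claim_held=True)
print(ledger.summary("alice", "organic chemistry"))  # 2 forecasts, mean closer to 0
print(ledger.summary("bob", "organic chemistry"))
```

The count matters as much as the mean: a short record with a good average is weaker evidence of skill than a long one.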

🟡 Cross-Domain Synthesis

An AI-resolved market, by design, would integrate information from many sources. The AI oracle could be continuously ingesting new data, publications, and even raw experimental results. In doing so, it breaks the siloed nature of peer review (where each paper is judged mostly on its own, by a small set of eyes). For example, if three different preprints all address the same hypothesis with varying outcomes, the AI can synthesize these signals when resolving bets, and traders can take into account all known studies. This holistic integration might reduce duplication and foster collaboration: researchers working on similar questions could essentially “meet in the market” and become aware of each other’s work more rapidly than if they encountered it only months later in journals.
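Synthesizing several studies of the same hypothesis is, at its simplest, a meta-analysis. The sketch below pools hypothetical effect sizes from three preprints with inverse-variance (fixed-effect) weighting; the function name and all numbers are assumptions for illustration, not a method the essay prescribes.

```python
import math
from typing import List, Tuple


def fixed_effect_pool(estimates: List[float], std_errors: List[float]) -> Tuple[float, float]:
    """Inverse-variance weighted (fixed-effect) pooled estimate and its standard error."""
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need matching, non-empty lists")
    weights = [1.0 / se ** 2 for se in std_errors]  # more precise studies count more
    pooled = sum(w * e for w, e in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se


# Hypothetical effect sizes from three preprints on the same hypothesis.
estimates = [0.30, 0.10, 0.20]
std_errors = [0.10, 0.10, 0.20]
pooled, se = fixed_effect_pool(estimates, std_errors)
low, high = pooled - 1.96 * se, pooled + 1.96 * se  # approximate 95% interval
print(round(pooled, 3), round(se, 3), (round(low, 3), round(high, 3)))
```

A fixed-effect model assumes every study estimates one common effect. When studies plausibly differ in population or method, a random-effects model (for example, DerSimonian–Laird) is the more usual choice.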

Potential Issues

🟡 Loss of Feedback and Mentorship: Peer review provides constructive criticism and mentorship that help improve research and train new scientists. Markets offer only numerical signals, lacking the explanatory dialogue crucial to learning.

🟡 Public Perception: Peer-reviewed publications carry an implicit expert endorsement. Replacing them with market-set probabilities resolved by AI might look like gambling to some, undermining public trust and regulatory acceptance. Without transparency and explanations, markets may struggle to gain authority.

🟡 Resolution Ambiguities: Scientific claims are often ambiguous. An AI oracle applying rigid criteria may prematurely reject valid work or validate flawed results. Human oversight may still be needed to interpret complex or evolving evidence.

🟡 Market Expertise: Markets need active, informed participants, but expert time is limited. Thin or biased markets could misprice claims, especially in niche fields, and even AI traders may introduce systematic errors or herding effects.

🟡 Ethical and Legal Concerns: Introducing financial incentives creates a risk of manipulation. Legal frameworks may classify such markets as gambling or securities, limiting scalability unless they are designed with safeguards such as non-monetary tokens.
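One way to soften the resolution-ambiguity problem is to let the oracle abstain. In the hypothetical rule below, a claim resolves YES or NO only when enough pieces of evidence exist and the oracle's confidence clears a threshold in either direction; otherwise it escalates to human review. The `resolve` function, the 0.95 threshold, and the two-item evidence minimum are illustrative assumptions.

```python
from enum import Enum


class Resolution(Enum):
    YES = "yes"
    NO = "no"
    ESCALATE = "escalate to human review"


def resolve(confidence_true: float, evidence_count: int,
            min_evidence: int = 2, threshold: float = 0.95) -> Resolution:
    """Resolve a claim only when evidence is sufficient and the oracle is confident."""
    if evidence_count < min_evidence:
        return Resolution.ESCALATE  # too little evidence to decide either way
    if confidence_true >= threshold:
        return Resolution.YES
    if confidence_true <= 1.0 - threshold:
        return Resolution.NO
    return Resolution.ESCALATE      # ambiguous middle band: defer to humans


print(resolve(0.97, evidence_count=3).value)  # yes
print(resolve(0.60, evidence_count=5).value)  # escalate to human review
```

Keeping the market open instead of escalating is another option; either way, a wide abstention band trades speed of closure for fewer premature resolutions.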

The benefits (speed, transparency, scalability) are tangible. Yet the costs (loss of narrative explanation, risk of opacity, potential manipulation) are non-trivial. The future of epistemic validation may lie not in replacement, but synthesis.

Rethinking Epistemic Authority

Under peer review, authority is centralized: journals confer legitimacy, and a credentialed few dictate acceptance. Markets decentralize this function, distributing epistemic power among many actors. Credibility becomes a function of demonstrated predictive success rather than institutional prestige.

This epistemological shift aligns with broader trends toward algorithmic governance. Like credit scores or algorithmic sentencing, prediction markets convert complex human judgments into quantifiable metrics. While this enhances scalability, it challenges the norm of reasoned justification. Without explanation, market-based authority may resemble technocratic fiat rather than democratic discourse. To mitigate this, AI agents could generate interpretative reports (analogous to judicial opinions) explaining resolution decisions and tracing evidence paths. This would preserve transparency and justify epistemic conclusions to both experts and the public.
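An interpretative report of this kind is, at minimum, a structured record linking the outcome to the evidence behind it. The Python sketch below shows one possible shape; the `ResolutionReport` and `EvidenceItem` classes, their fields, and the placeholder sources are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class EvidenceItem:
    source: str     # identifier of the study or dataset (placeholder strings below)
    finding: str    # one-line summary of what the source reports
    direction: int  # +1 supports the claim, -1 contradicts it, 0 inconclusive


@dataclass
class ResolutionReport:
    claim: str
    outcome: str
    rationale: str
    evidence: List[EvidenceItem] = field(default_factory=list)

    def render(self) -> str:
        """Produce a human-readable report tracing the outcome back to its evidence."""
        labels = {1: "supports", -1: "contradicts", 0: "inconclusive"}
        lines = [
            f"Claim: {self.claim}",
            f"Outcome: {self.outcome}",
            f"Rationale: {self.rationale}",
            "Evidence trail:",
        ]
        for i, item in enumerate(self.evidence, start=1):
            lines.append(f"  {i}. [{labels[item.direction]}] {item.source}: {item.finding}")
        return "\n".join(lines)


report = ResolutionReport(
    claim="Hypothetical effect X replicates in independent samples",
    outcome="NO",
    rationale="Two adequately powered replications found no effect; the original result is isolated.",
    evidence=[
        EvidenceItem("original-study", "reports effect X", +1),
        EvidenceItem("replication-A", "no effect detected", -1),
        EvidenceItem("replication-B", "no effect detected", -1),
    ],
)
print(report.render())
```

Keeping each evidence item's direction explicit lets a reader check whether the stated rationale actually follows from the trail.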

New hierarchies may also emerge. The best predictors (regardless of academic status) could become recognized as “meta-experts,” distinguished not by their publications but by their calibration. This echoes Goldman’s social epistemology: veritistic value accrues not only through discovery but through accurate belief filtering.
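Calibration, the property that would distinguish such meta-experts, can be measured by bucketing a forecaster's probabilities and comparing each bucket's average forecast with how often those claims actually held. The sketch below also computes the expected calibration error (the count-weighted average gap); the function names, bin count, and data are illustrative assumptions.

```python
from typing import List, Tuple


def calibration_table(forecasts: List[float], outcomes: List[int], n_bins: int = 5):
    """Return (bin_low, bin_high, count, mean_forecast, observed_rate) for each non-empty bin."""
    bins: List[List[Tuple[float, int]]] = [[] for _ in range(n_bins)]
    for f, o in zip(forecasts, outcomes):
        idx = min(int(f * n_bins), n_bins - 1)  # a forecast of exactly 1.0 goes in the top bin
        bins[idx].append((f, o))
    table = []
    for i, members in enumerate(bins):
        if not members:
            continue
        mean_forecast = sum(f for f, _ in members) / len(members)
        observed_rate = sum(o for _, o in members) / len(members)
        table.append((i / n_bins, (i + 1) / n_bins, len(members), mean_forecast, observed_rate))
    return table


def expected_calibration_error(table, total: int) -> float:
    # Count-weighted average gap between stated confidence and observed frequency.
    return sum(count / total * abs(mean_f - rate) for _, _, count, mean_f, rate in table)


# Hypothetical forecaster: four resolved claims.
forecasts = [0.10, 0.15, 0.85, 0.90]
outcomes = [0, 0, 1, 1]
table = calibration_table(forecasts, outcomes)
print(table)
print(round(expected_calibration_error(table, len(forecasts)), 3))  # 0.125
```

A well-calibrated forecaster has an expected calibration error near zero; with only a handful of resolved claims, as here, the estimate is very noisy.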

Gatekeeping Reimagined

The most radical implication is the displacement of journals as gatekeepers. In a market-first system, every claim is open to evaluation from the outset, and the open marketplace determines credibility after publication rather than before. This promotes epistemic inclusivity: early-career researchers, independent scientists, and marginalized voices gain entry without needing elite endorsement.

Yet with openness comes risk: market manipulation, information asymmetry, and strategic distortion. Just as financial markets are regulated, epistemic markets will require design constraints (circuit breakers, influence caps, transparency norms) to protect their integrity.
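Two of the design constraints listed above can be sketched as pre-trade checks. In this hypothetical `GuardedMarket`, an influence cap limits any single trader's net position and a circuit breaker halts trading when one order would move the price more than a set amount. The caller is assumed to supply the quoted post-trade price from whatever market maker is in use; the class name and limits are illustrative.

```python
from typing import Dict


class GuardedMarket:
    """Pre-trade checks: a per-trader position cap and a price-move circuit breaker."""

    def __init__(self, max_position: float, max_move: float) -> None:
        self.max_position = max_position  # influence cap on any trader's net shares
        self.max_move = max_move          # largest price change one order may cause
        self.positions: Dict[str, float] = {}
        self.halted = False

    def submit(self, trader: str, shares: float, price_before: float, price_after: float) -> str:
        """Validate an order; `price_after` is the quoted post-trade price supplied by the caller."""
        if self.halted:
            return "rejected: trading halted pending review"
        new_position = self.positions.get(trader, 0.0) + shares
        if abs(new_position) > self.max_position:
            return "rejected: exceeds influence cap"
        if abs(price_after - price_before) > self.max_move:
            self.halted = True  # trip the breaker; a human or rule must reset it
            return "rejected: circuit breaker tripped"
        self.positions[trader] = new_position
        return "accepted"


m = GuardedMarket(max_position=100, max_move=0.2)
print(m.submit("alice", 50, 0.50, 0.60))  # accepted
print(m.submit("alice", 60, 0.60, 0.70))  # rejected: exceeds influence cap
print(m.submit("bob", 10, 0.60, 0.90))    # rejected: circuit breaker tripped
print(m.submit("carol", 1, 0.60, 0.61))   # rejected: trading halted pending review
```

A cap on net position does not stop one person from trading through many accounts, so in practice it would need to be paired with identity or stake-based safeguards.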

This model also rebalances the burden of proof. Currently, unorthodox ideas struggle to pass editorial thresholds. Markets, however, allow even minority opinions to persist as long as some are willing to back them. In the long run, successful contrarians are rewarded, fostering pluralism without abandoning empirical rigor.

The Evolving Scientific Paper

If validation shifts post-publication, the paper’s function may evolve. Rather than static PDFs, papers could become dynamic entities, continuously updated as evidence and market prices change.

Narrative remains essential. But market widgets embedded within papers could show live credibility ratings, creating a hybrid model: narrative for humans, markets for machines. Replications and negative findings, historically underpublished, would become market-relevant, increasing their epistemic and economic value. This reconfiguration of the paper mirrors shifts already underway in open science. Platforms like F1000Research and preprint servers point to a future where transparency, not gatekeeping, is the baseline.

The Episteme Model

Episteme exemplifies this future. A decentralized, AI-driven platform for scientific prediction markets, it tests the hypothesis that collective intelligence and algorithmic resolution can outperform peer review. Users stake tokens on claims, and AI tracks emerging evidence to resolve outcomes. The result is an “epistemic price”: a real-time signal of credibility. Episteme neither replaces nor duplicates journals; it provides an additional validation layer, particularly suited to domains with high uncertainty or frequent replication failures.
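The essay does not describe how Episteme prices or settles stakes. As one hypothetical illustration of token staking with an implied probability, the sketch below uses a parimutuel pool, in which winners split all stakes in proportion to what they put in; this is a swapped-in mechanism, not Episteme's, and the function names and refund convention are assumptions.

```python
from typing import Dict, Tuple

Stakes = Dict[str, Tuple[str, float]]  # trader -> (side, amount), side is "yes" or "no"


def implied_probability(stakes: Stakes, side: str = "yes") -> float:
    """Share of the pool staked on `side`, read as the crowd's probability (no fees assumed)."""
    pool = sum(amount for _, amount in stakes.values())
    return sum(amount for s, amount in stakes.values() if s == side) / pool


def settle(stakes: Stakes, outcome: str) -> Dict[str, float]:
    """Winners split the whole pool pro rata; if nobody backed the outcome, refund everyone."""
    pool = sum(amount for _, amount in stakes.values())
    winning_total = sum(amount for side, amount in stakes.values() if side == outcome)
    if winning_total == 0:
        return {trader: amount for trader, (_, amount) in stakes.items()}
    return {
        trader: (amount / winning_total * pool if side == outcome else 0.0)
        for trader, (side, amount) in stakes.items()
    }


stakes = {"alice": ("yes", 30.0), "bob": ("no", 10.0), "carol": ("yes", 10.0)}
print(implied_probability(stakes))  # 0.8
print(settle(stakes, "yes"))        # {'alice': 37.5, 'bob': 0.0, 'carol': 12.5}
```

A parimutuel pool needs no market maker, but unlike a continuously priced market, early stakers do not lock in a price, since payouts depend only on the final pool.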

Reformation, Not Replacement

The scientific paper isn’t dead, but its role is shifting. AI-resolved prediction markets challenge the institutional logic of peer review, proposing a vision that is faster, fairer, and more accountable, but also riskier and potentially less humane. The optimal future likely blends both: peer review for interpretative depth, markets for empirical responsiveness. In this hybrid, science becomes not a static archive but a living, contested terrain, validated continuously, judged collectively, and encoded in protocols, not papers.

This is not science’s end, but its reinvention.

References

Al-Mousawi, Y. (2020). A brief history of peer review. F1000 Blog. https://blog.f1000.com/2020/01/31/a-brief-history-of-peer-review/

Baldwin, M. (2019). Peer review. Encyclopedia of the History of Science. https://doi.org/10.34758/7s4y-5f50

Bloom, T. (2017). Referee report for: What is open peer review? A systematic review [version 1; referees: 1 approved, 3 approved with reservations]. F1000Research, 6, 588. https://doi.org/10.12688/f1000research.11369.2

Boldt, A. (2011). Extending ArXiv.org to achieve open peer review and publishing. Journal of Scholarly Publishing, 42(2), 238–242. https://doi.org/10.3138/jsp.42.2.238

Bright, L. K., & Heesen, R. (2021). Is peer review a good idea? British Journal for the Philosophy of Science, 72(3), 635–663. https://doi.org/10.1093/bjps/axz029

Crow, J. M. (2024). Peer-replication model aims to address science’s “reproducibility crisis.” Nature.

Dreber, A., Pfeiffer, T., Almenberg, J., Isaksson, S., Wilson, B., Chen, Y., … Johannesson, M. (2015). Using prediction markets to estimate the reproducibility of scientific research. Proceedings of the National Academy of Sciences, 112(50), 15343–15347. https://doi.org/10.1073/pnas.1516179112

Ford, E. (2015). Open peer review at four STEM journals: An observational overview. F1000Research, 4, 6. https://doi.org/10.12688/f1000research.6005.2

Fyfe, A., Moxham, N., McDougall-Waters, J., & Røstvik, C. M. (2022). A history of scientific journals: Publishing at the Royal Society 1665–2015. UCL Press.

Herron, D. M. (2012). Is expert peer review obsolete? A model suggests that post-publication reader review may exceed the accuracy of traditional peer review. Surgical Endoscopy, 26(8), 2275–2280. https://doi.org/10.1007/s00464-012-2161-1

Hosking, R. (n.d.). Peer review — A historical perspective. MIT Communications Lab Blog. https://mitcommlab.mit.edu/broad/commkit/peer-review-a-historical-perspective/

In praise of peer review. (2023). Nature Materials, 22, 1047. https://doi.org/10.1038/s41563-023-01661-7

Jubb, M. (2016). Peer review: The current landscape and future trends. Learned Publishing, 29(1), 13–21. https://doi.org/10.1002/leap.1008

Jukola, S., & Visser, H. R. (2017). On “Prediction markets for science,” a reply to Thicke. Social Epistemology Review and Reply Collective, 6(11), 46–55.

Kitcher, P. (2002). Veritistic value and the project of social epistemology [Review of Knowledge in a Social World, by A. I. Goldman]. Philosophy and Phenomenological Research, 64(1), 191–198. http://www.jstor.org/stable/3071029

Merton, R. K. (1942). The ethos of science. Journal of Legal and Political Sociology, 1, 115–126. Reprinted in R. K. Merton & P. Sztompka (Eds.), Social structure and science (1996). University of Chicago Press.

Polanyi, M. (1962). The republic of science: Its political and economic theory. Minerva, 1(1), 54–73.

Rajtmajer, S., Griffin, C., Wu, J., Fraleigh, R., Balaji, L., Squicciarini, A., … Giles, C. L. (2022). A synthetic prediction market for estimating confidence in published work. arXiv. https://arxiv.org/abs/2201.06924

Ross-Hellauer, T. (2017). What is open peer review? A systematic review. F1000Research, 6, 588. https://doi.org/10.12688/f1000research.11369.2

Seringhaus, M. R., & Gerstein, M. B. (2006). The death of the scientific paper. The Scientist, 20(9), 25.

Smith, R. (2006). Peer review: A flawed process at the heart of science and journals. Journal of the Royal Society of Medicine, 99(4), 178–182. https://doi.org/10.1258/jrsm.99.4.178

Tennant, J. P., & Ross-Hellauer, T. (2020). The limitations to our understanding of peer review. Research Integrity and Peer Review, 5, Article 6. https://doi.org/10.1186/s41073-020-00092-1

Thicke, M. (2017). Prediction markets for science: Is the cure worse than the disease? Social Epistemology, 31(5), 451–467. https://doi.org/10.1080/02691728.2017.1352623